When trying to define DevOps, one of the first things that people will read about is consistency in delivery. That is because DevOps prescribes a specific set of processes that development and operations teams should be following when moving code from requirements/stories into production environments with continuous deployment pipelines.

In an otherwise uncertain world, everyone should be looking for some consistency in their development and deployment processes. Transitioning traditional software development processes into a DevOps process flow will bring exactly that.

Since DevOps is a part of agile, through successful agile adoption and subsequent successful DevOps adoption organizations can bring a consistent flow or lifecycle to their products and services. Let's spend a bit of time talking about flow and lifecycle to understand how it can enable your business to accelerate.

What is the DevOps lifecycle?

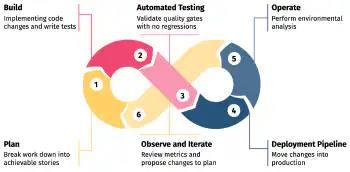

DevOps lifecycles can be described in terms of different stages of continuous development including integration, testing, deployment, and monitoring. Every change, no matter how small, moves through the complete lifecycle. You can visualize DevOps flow as a continuous loop with no end. DevOps processes are not limited to the scope of a single project or single initiative.

The DevOps lifecycle is divided into six phases representing the processes, capabilities, and tools required for development and operations. Although the phases appear to run sequentially, they form a loop in which each phase feeds the next. During these phases, the team collaborates and communicates in order to ensure alignment, velocity, and quality.

Plan

DevOps teams should embrace agile approaches for speed and quality. An agile approach consists of iterative methodologies that help teams break down work into small segments for more incremental value.

In a traditional software development environment, something like Waterfall is going to be used as the planning tool. Teams are going to try to be smarter than the problems they are trying to solve and build in a buffer, almost like they have a crystal ball at their disposal.

An Agile development process is going to plan out work into much smaller stories which allow for continuous learning to occur. The team will also break down work to accommodate automated testing, DevOps automation, infrastructure as code, observability, and much more to ensure they are being provided a consistent feedback loop.

Consistency in your planning methodology is a key to great flow. Subscribing to a consistent Agile methodology for delivery will give the team a solid foundation to lean back on when looking for stable footing. The more consistency that you can provide your delivery teams, the more consistency the business will get as an added bonus.

Always being ready for a production environment is the primary goal of an effective DevOps process flow. Any planned change should be scoped so that relatively small segments of work can be fully completed and released to a production environment rapidly, ensuring a feedback loop gets kicked off quickly.

Why am I Struggling with Planning?

This is an area that I have seen most organizations struggle with when adopting DevOps or agile practices. There is a lot of dopamine that gets released when delivering software with a big bang, and it can seem fairly mundane to make a cultural shift by adopting new processes which seemingly slow down delivery.

Effective planning will not only speed up delivery but will also make delivery more consistent. All of the time and energy that was wasted on any critical step in the process not being done perfectly will be replaced by iteration following a series of smaller successes and failures. The software product your company produces will achieve a higher degree of stability, reliability, security, and consistent expectations.

Planning will also lead to improved collaboration on your teams. By circulating a plan that everyone can digest, give feedback on, and help improve, everyone on the team will have a voice in the delivery process. While you may feel like you are being effective delivering by planning in real time, I can assure you that you are not as effective as teams who spend a great deal more time planning.

Fully embracing structured agile practices in a meaningful way can help solve these issues. A team that has failed to adopt agile practices is going to continue to wonder why its planning is ineffective. Stakeholders will also be left in a chaotic place that ruins good communication and strategic planning, creating a toxic culture.

Build

Once stories are planned, the implementation process will take hold. The technology team(s) will start implementing code changes that meet the criteria for the stories. Automated testing will also be written to validate the code changes.

These changes will be committed to a code repository where continuous integration enforces a variety of standards and best practices. Code scanning should be done to look for known security issues and known bad practices such as queries inside of loops, hard-coded keys or passwords, and many more. This will be the first of many quality assurance checks.
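As a rough illustration of what one such scan might flag, here is a minimal Python sketch that looks for keys or passwords committed in source text. The function name `find_hardcoded_secrets` and the two patterns are assumptions made for this example; real scanners ship far larger rule sets.

```python
import re

# Hypothetical, deliberately small rule set for illustration only.
SECRET_PATTERNS = [
    # An assignment of a quoted literal to a suspicious-looking name.
    re.compile(r"(?i)\b(password|passwd|secret|api_key|token)\b\s*=\s*['\"][^'\"]+['\"]"),
    # The general shape of an AWS access key ID.
    re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
]


def find_hardcoded_secrets(source: str) -> list[int]:
    """Return the 1-based line numbers that match any secret pattern."""
    flagged = []
    for number, line in enumerate(source.splitlines(), start=1):
        if any(pattern.search(line) for pattern in SECRET_PATTERNS):
            flagged.append(number)
    return flagged
```

A continuous integration step could fail the build whenever this function returns a non-empty list for a changed file.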

Giving proper time and space for building is critical in a DevOps process flow. Software delivery is a complex process that requires time, deep thought, and focus and there is no way to devise a shortcut. A DevOps group will have principles that they are trying to adhere to which drive overall quality. They will also need to think through the longevity of their solution.

A DevOps team will also need to conceptualize how their changes are going to fit into an overall release process. A seemingly simple change may touch many parts of an active system, which could introduce regressions. Some changes will need to go through a review process that ends with the approved code being merged into the main CD workflow.

As a business leader, your cost savings do not originate from fewer people working harder or longer in this step of the lifecycle. Your cost savings come from the predictability of delivery. Team members hold each other accountable to specific quality standards, meaning you will end up with less rework in the long run.

Automated Testing

The most critical tool in the development pipeline is test automation. The lifeblood of any DevOps toolchain is monitoring and feedback. As development teams are writing their code, they are also writing automated tests.

The next quality gate past code scanning will be a variety of automated testing steps. Unit tests, integration tests, functional tests, etc. will be run to ensure that features meet their specifications and to validate that regressions were not introduced into existing features.
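A minimal sketch of such a gate, using Python's standard `unittest` module, might look like the following. The `apply_discount` function and the `gate_passes` helper are hypothetical names invented for this example; they stand in for whatever feature and pipeline step your team actually has.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical feature under test: reduce a price by a percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTests(unittest.TestCase):
    def test_new_feature_meets_specification(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_existing_behavior_has_not_regressed(self):
        # A zero discount must leave the price unchanged.
        self.assertEqual(apply_discount(99.99, 0), 99.99)

    def test_invalid_input_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(10.0, 150)


def gate_passes() -> bool:
    """Run the suite and report whether the pipeline may continue."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApplyDiscountTests)
    result = unittest.TextTestRunner(verbosity=0).run(suite)
    return result.wasSuccessful()
```

If `gate_passes()` returns `False`, the change stops here and never reaches a deployment step.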

A traditional development team would progress their code changes through multiple testing environments with quality assurance engineers manually validating each component that has changed along with their dependencies. This is extremely costly and error-prone.

By moving testing with code, a team achieves a stance of constant monitoring and feedback in relation to how they are delivering code, along with more stringent controls around what makes it into production environments.

Continuous testing also allows an organization to combine speed with user feedback to get value into the market more quickly. As users are able to provide more and more detail through their various communications channels, teams are able to adapt their new code to meet customer demand. While making these pivots, software quality cannot suffer. DevOps processes like continuous integration, continuous testing, and continuous deployment help make that a reality.

Without continuous testing, organizations that are struggling to realize the benefits of DevOps will continue to suffer from costly delays, poor customer feedback, and operations teams that are overworked with low morale.

Continuous testing is also a great way to bring development and operations teams closer together during your DevOps adoption journey. In a more traditional SDLC, code changes will be pushed out to a production environment and an operations team will be notified of the changes. This is not normally a smooth process.

By focusing on more DevOps-oriented processes, development and operations teams are together in a single group and everyone is reacting to alerts and outages. With guidance from a more operationally focused thought process, more robust solutions can be developed with accompanying tests creating a more complete solution.

Deployment Pipeline

Once continuous testing is completed, code will move down the continuous deployment pipeline and make its way onto compute. This could be a preview environment or directly into production. For a business, this is the "payout" moment of a DevOps process flow. Once approved code is released into production, a business can start to realize the value of its investments.

A series of steps will be orchestrated in code to run infrastructure as code changes, data updates, metadata updates, software updates, governance updates, etc. to bring the environment into its new consistent state. This is generally the most visible use of DevOps tools in the business.

Every organization is going to work a little bit differently prior to code making its way to a production environment. A series of testing environments may be between a code repository and production. I would urge any business to not rely on manual validation as part of their pipelines but to use these environments to do user testing with feature flags being enabled or disabled.
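One way to picture a feature flag is as a deterministic check that decides, per user, whether a feature is switched on. The sketch below is an assumption-laden illustration rather than any particular product's API: the `is_enabled` function, its parameters, and the percentage-based rollout are all invented for this example.

```python
import hashlib


def is_enabled(flag: str, user_id: str, rollout_percent: int) -> bool:
    """Deterministically place a user inside or outside a flag's rollout."""
    digest = hashlib.sha256(f"{flag}:{user_id}".encode()).hexdigest()
    bucket = int(digest, 16) % 100  # stable bucket in the range 0..99
    return bucket < rollout_percent
```

Because the bucket is derived from a hash of the flag and user, the same user sees the same behavior on every request, and setting `rollout_percent` to 0 or 100 disables or enables the feature for everyone.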

Many different models are used here, but the two most recognizable are canary deployments and blue/green deployments.

Canary Deployments

A canary deployment will take a small subset of servers and release new features to them. If there is any problem with the release, that small subset of servers is rolled back immediately. If it is successful, a larger number of servers will start their deployment process until a critical mass is reached.

While this deployment approach is occurring, users will be sent to either newly deployed servers or to servers yet to be updated. Development team members will need to be aware of this as their changes could break backward compatibility with the previous release.
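The staged rollout described above can be sketched in a few lines of Python. Everything here is hypothetical: `canary_rollout`, the `deploy`, `is_healthy`, and `rollback` callbacks, and the default stage sizes are assumptions for illustration, and this version rolls back every server updated so far when a batch is unhealthy.

```python
from typing import Callable, Sequence


def canary_rollout(
    servers: Sequence[str],
    deploy: Callable[[str], None],
    is_healthy: Callable[[str], bool],
    rollback: Callable[[str], None],
    stages: Sequence[float] = (0.1, 0.5, 1.0),
) -> bool:
    """Deploy in growing batches; undo all updated servers on failure."""
    updated: list[str] = []
    for fraction in stages:
        # Cumulative number of servers that should be updated after this stage.
        target = max(1, int(len(servers) * fraction))
        batch = servers[len(updated):target]
        for server in batch:
            deploy(server)
            updated.append(server)
        if not all(is_healthy(server) for server in batch):
            for server in reversed(updated):
                rollback(server)
            return False
    return True
```

With ten servers and the default stages, the first batch is a single server, the second brings the total to five, and the last covers the whole fleet.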

This method is named after the phrase "canary in the coal mine". Before modern gas detection technology, miners would bring a canary down into the coal mine. If toxic gases were released or air quality suffered, the canary would feel the effects first, alerting the miners.

Blue/Green Deployments

Unlike a canary, Blue/Green focuses on getting a set of servers deployed and then swapping them into service while taking the previous release out of load balancer rotation. High-level validations can then be done to ensure stable performance and reliability before fully terminating older instances. If there is a problem, the previous fleet of servers can be put back into rotation and newly deployed servers can be stopped or investigated.
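The swap and its fallback can be modeled with a very small Python sketch. The `LoadBalancer` class and `blue_green_switch` function are hypothetical stand-ins for whatever your platform provides, and the `validate` callback represents the high-level validations mentioned above.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class LoadBalancer:
    """Hypothetical stand-in for a real load balancer's configuration."""
    live: str  # name of the fleet currently receiving traffic


def blue_green_switch(
    lb: LoadBalancer, new_fleet: str, validate: Callable[[str], bool]
) -> bool:
    """Swap traffic to new_fleet; restore the previous fleet if validation fails."""
    previous = lb.live
    lb.live = new_fleet  # put the new fleet into rotation
    if validate(new_fleet):
        return True  # the previous fleet can now be terminated
    lb.live = previous  # put the previous fleet back into rotation
    return False
```

The key design point is that the previous fleet stays intact until validation succeeds, so falling back is a configuration change rather than a redeployment.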

Operate

In a traditional software development process, operating an environment is generally fairly cumbersome and stressful. As issues arise, there is pressure to get things back online and run post-mortems to figure out the root cause of failures.

Adopting continuous delivery practices as part of your overall DevOps processes means that operations is part of everyday life. Small incremental changes are making their way out to production and as operational activities present themselves, DevOps practices would dictate that those activities should be automated.

By bringing the entire delivery flow into code changes instead of utilizing manual operators, organizations can limit their spending on resources that are not building new value for the market. Instead, they can retrain and utilize those resources to their fullest potential.

Observe and Iterate

Quickly find and solve technical problems impacting the functionality of products. Automate alerts so the team learns about changes in your systems before they interrupt services.

IT Operations would traditionally wait for alarms to go off and then jump in like Superman to fix whatever is broken. When implementing DevOps, observability is king. Being able to get continuous feedback from systems means that data-driven decision-making happens far more frequently.

When a team has data to make decisions, they are able to logically think through their next step while maintaining quality. When data is not available, teams are left guessing at how to improve a bad situation, and those guesses will generally make it worse.
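To make the idea of continuous feedback a little more concrete, here is a minimal Python sketch of an alert driven by data rather than by someone noticing an outage. The `ErrorRateAlert` class, its sliding window, and the default threshold are assumptions chosen for illustration; a real observability stack would do far more.

```python
from collections import deque


class ErrorRateAlert:
    """Track recent request outcomes and flag when failures exceed a threshold."""

    def __init__(self, window: int = 100, threshold: float = 0.05) -> None:
        # Only the most recent `window` outcomes are kept.
        self.outcomes = deque(maxlen=window)
        self.threshold = threshold

    def record(self, succeeded: bool) -> bool:
        """Record one request; return True when the team should be alerted."""
        self.outcomes.append(succeeded)
        failures = self.outcomes.count(False)
        return failures / len(self.outcomes) > self.threshold
```

Each call to `record` answers a single question from recent data: has the failure rate over the last `window` requests risen above what the team has agreed is acceptable?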

Anything coming out of the continuous feedback loop should be taken back through Plan to ensure proper visibility and prioritization can take place. From there the team is able to send those changes through the DevOps lifecycle process flows to ensure effective changes are continuously being made to products that have a positive impact.

What are the 5 C's of the DevOps Process?

Below are the 5 C's of DevOps. The 5 C's are in support of building rapid application delivery.

Continuous Integration

Continuous Integration is a DevOps delivery practice where teams push code to centralized repositories where automated build and testing steps are kicked off against the incoming changes. From there, changes are validated for regressions, functionality, and interoperability.

In DevOps, CI/CD are cornerstone processes that enable the idea of flow. The ideal result of a Continuous Integration and deployment pipeline is to have code reach production automatically after committing.

You can learn more about Continuous Integration here.

Continuous Testing

Continuous Testing is a DevOps practice that enables frequent and consistent automated testing of changes. Developers spend time describing how their software should work with tests. Those tests are then run on every commit and in subsequent quality checkpoints in the delivery pipeline.

You can learn more about Continuous Testing here.

Continuous Delivery

Continuous Delivery is a DevOps practice that uses automation to push changes to different environments multiple times per day. As developers push their code to central code repositories, that code is first run through Continuous Integration, and when Continuous Testing is passed, Continuous Delivery will take hold and deploy that code into the next downstream environment.
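The ordering described here, where each practice gates the next, can be sketched as a short Python function. The `run_pipeline` name, the `Stage` alias, and the stage names in the usage note are hypothetical and exist only to illustrate the gating behavior.

```python
from typing import Callable, Sequence

# A stage is a name paired with a step that reports success or failure.
Stage = tuple[str, Callable[[], bool]]


def run_pipeline(stages: Sequence[Stage]) -> list[str]:
    """Run stages in order, stopping at the first failure; return names that passed."""
    passed = []
    for name, step in stages:
        if not step():
            break
        passed.append(name)
    return passed
```

For example, calling `run_pipeline` with stages named "integrate", "test", and "deliver" will never run "deliver" if the "test" step returns `False`.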

You can learn more about Continuous Delivery here.

Continuous Deployment

Continuous Deployment is a DevOps practice focused on delivering code to production multiple times per day. A business will be able to net more value from its technology by ensuring that new features, products, and bug fixes are always available to its consumers as soon as they are committed to a code repository.

You can learn more about Continuous Deployment here.

Continuous Monitoring

Continuous Monitoring is a DevOps practice centered around feedback loops and data-driven decision-making. This has morphed in recent years into the overall idea of observability. This practice ensures that technology is complete when day 2 operations are accounted for and real data can be gathered to understand the performance characteristics of the deployed software.

You can learn more about Continuous Monitoring here.

Continuous Planning

Continuous Planning is the DevOps practice of ensuring that all work is prioritized and ordered through a methodology where execution becomes second nature. Work should be continuously analyzed and defined, requirements gathered, and stories built to promote good communication and alignment against a strategic vision.

You can learn more about Continuous Planning here.

Conclusion

Flow is important to DevOps. When implementing DevOps, organizations should pay very close attention to what their workflows look like. How you promote and implement continuous monitoring, iteration, continuous integration, and continuous deployment can have a huge impact on your adoption of DevOps and adoption of agile practices.