Building a DevOps pipeline is easy. Wire up a few components, write some scripts, glue on some tests, add credentials for production, and voilà, you are DevOps-ing! Well… not exactly. In my experience, this is where things usually start, but it quickly leads to unintended churn in the delivery cycle. Sometimes that churn goes completely unrecognized simply because the thought of doing DevOps is far better than the idea of not doing DevOps. Either way, do yourself a favor and take a step back to look at how intentional design, intentional component selection, and care and feeding can get you to a spot where your pipelines work for you instead of you working for your pipelines.

In case you are wondering, "What is a pipeline in DevOps?": a pipeline in DevOps is centered around the idea of ensuring code is continuously delivered, which in turn means the business sees a continuous flow of value. There are many DevOps pipeline tools on the market, but I want to take a step back and discuss the planning and design side of things before diving directly into tooling.

Intentional Design

Deploying Jenkins, giving it some keys, giving it a job to do, and then finally pointing at production will yield a short-term win with long-term consequences. While I believe that most aspiring DevOps engineers will start here, more intentional design should be considered when building a pipeline.

Who are the consumers of your DevOps Pipeline?

Consider who is going to be consuming the pipeline that is being created. Some organizations want manual approvals, some want automated approvals, some are a bit more cowboy and approvals are not part of the conversation. Sometimes a QA team, project/program management, developers, executives, or the lunch lady could be consumers of your pipeline. Consider them when designing what you will use for delivery.

What are the goals of your DevOps Pipeline?

Not everyone is going to agree here, but when I am doing a design, I start by conceptualizing my end state much like pointing a ship in the direction that I think I want to go. This allows me to get more eyes on the concept that I am building toward while pulling in feedback from interested stakeholders. On the back of that work, I will take a step back and try to understand the strategic themes and goals of the pipeline to get more broad alignment from the consumers and stakeholders.

Just because someone tells you that they want something doesn't mean that you heard them correctly or that they fully understood what they were asking for. Take the time to dissect their request, then either align it to your design by walking the requestor through how your solution solves their needs, or reframe your own thinking about the problem to accommodate their request.

Is there a logical flow you can follow?

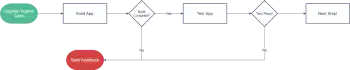

I like to map out my process as illustrated below.

By taking the time to understand the ins and outs of your pipeline, the decision tree that gets created, the necessary supplemental steps, and the points of failure, you can design a logical flow that works across many different arenas. A single logical flow may work well to get code into a development environment, but do you really need to redo each and every step to move code to the next environment, or can you adjust your logical flow to be less cumbersome?
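One way to make that question concrete is to separate the steps that only need to happen once from the steps that must happen in every environment. The sketch below is only an illustration of that idea: the step names and the `steps_for` helper are hypothetical, not taken from any particular tool.

```python
from typing import List

# Hypothetical step names for illustration; a real pipeline will have its own.
BUILD_ONCE_STEPS = ["checkout", "compile", "unit_test", "package"]
PER_ENVIRONMENT_STEPS = ["deploy", "smoke_test"]


def steps_for(environment: str, artifact_exists: bool) -> List[str]:
    """Return the ordered steps needed to get code into one environment.

    If an artifact has already been built, the build-once steps are
    skipped and only the per-environment steps are repeated.
    """
    steps = [] if artifact_exists else list(BUILD_ONCE_STEPS)
    steps += [f"{step}:{environment}" for step in PER_ENVIRONMENT_STEPS]
    return steps


# The first environment runs the whole flow; later ones reuse the artifact.
dev_steps = steps_for("dev", artifact_exists=False)
staging_steps = steps_for("staging", artifact_exists=True)
```

Here the development run goes through every step, while the staging run only deploys and smoke tests the artifact that already exists. Whether that split is right for you depends on your own decision tree and points of failure.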

Intentional Component Selection

Normally I am not a fan of Jenkins. I believe that it is allowing for quick and dirty instances of automation to be put out into the market in a way that does not promote stability. Don't get me wrong, Jenkins is an interesting tool if you look at it as a dumb task runner, but is a pretty poor tool if you are really taking automation seriously. Intentional component selection is a topic we should spend some time on due to the technology strongholds that are out in the market today.

So, who are the consumers of your DevOps Pipeline?

No no, this section is not a duplicate. When choosing your components, you need to consider your consumers. Luckily, most of your consumers in this space are going to be technology focused so we can hopefully ignore non-technical actors for the most part. If your team is primarily composed of PHP developers, it is probably not a good idea to try and pull in something which is well outside of the PHP ecosystem. Stick with tools that are similar to other existing tools in your environment.

Providing good feedback to your engineers is also something you should pay attention to. If your pipeline breaks for one reason or another and the error messages are cryptic, you will end up in the "you built it, you own it" paradigm. There is no democratization of the pipeline out to consumers to facilitate care and feeding, which we will discuss later. A good DevOps pipeline should support your engineers, not be an exercise in decoding the Enigma machine.

Perfection is the enemy of progress!

Component selection can be a long, drawn-out process. Spending too much time trying to decide will lead to the projects around you getting too far ahead of your efforts, causing a lot of undue stress and rework. It is better to pick something and prove why it won't work over time instead of trying to find the magical purple unicorn which solves 200% of use cases.

Clearly defined single responsibilities!

To fight perfection shutting down progress, try to limit your components to single responsibilities. The SOLID principles of object-oriented design can really be applied anywhere in technology. By limiting your components to a single responsibility, you can set up your components as interfaces with each other. Component 1 expects this input and provides this output. The magic between input and output can be as complex as you want it to be as long as the inputs and outputs are consistent. You can then feed the outputs of component 1 into the inputs of component 2. Continue this pattern for N components.

What you end up with is a set of "jobs" which facilitate specific tasks. Each of these jobs is testable, improvable, observable, and most importantly, understandable! If you want to break free from "you built it, you own it", then everything in your stack should be something that can be handed off to another flesh and blood human being who can pick up your work and run with it.

Care and Feeding

Any technology anywhere will need care and feeding. Nothing can be built and then just run like a perpetual motion machine, so you will need to consider the care and feeding of your DevOps pipeline. Some of it will be simple: password rotations, library updates, edge case fixes, etc. can all be accomplished as part of the daily flow of work. More complex care and feeding, such as component replacement, break-glass-in-case-of-emergency procedures, security hardening, etc., will need to be planned into your delivery lifecycle.

As a quick side tangent, when the term care and feeding comes up as part of normal conversation, does anyone else hear Ron Popeil and his overly excited audience say "Set it and forget it"?

Conclusion

As you can see, a stable DevOps pipeline consists of many steps which all feed off of each other, ending up in a GitOps-style deployment pipeline. I believe that the magic in any well-developed, well-set-up DevOps pipeline comes from a clear definition of your intentions, intentional components which provide specific functionality, and the same care and feeding that any other supported technology stack needs. Your pipeline does not need to be as complex, or as simple, as someone else's, since there is no one-size-fits-all solution to robust delivery. Start small, but be intentional, and you too can have a DevOps Pipeline Geared for Stability!